FACILITATE

Formative Assessment using Computationally-driven Insights of Learner Intent via Transdisciplinary AI for Technology Education

Summary

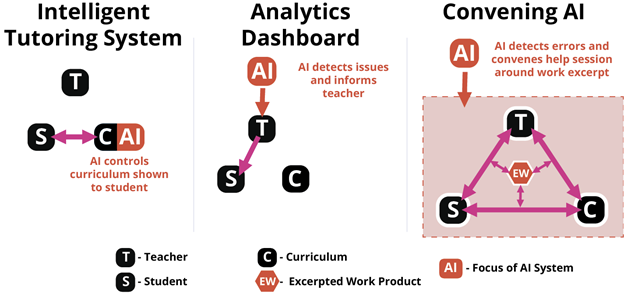

Intelligent Tutoring Systems and Analytics Dashboards have shown the potential of technology in education far beyond being a topic of instruction or method of delivery. Machine Learning and Artificial Intelligence (ML/AI) have long been employed in support of those roles. However, these approaches are strongly student- or teacher-facing; they do not focus on instructional interactions between instructors and students. FACILITATE explores an approach in which AI targets the entire instructional triangle: students, teachers, and curriculum content as a contextualized, interacting triad.

Contrasting models of AI roles under intended system usage. ITS AI is student-facing through curriculum. Dashboard AI is teacher-facing through information display. A convening approach brings students, teachers, and curricular context together around excerpts of student work-in-progress.

Specifically, we propose that an AI-driven tool could bring students and teachers together around specific “moments” in student work, playing a convening role. This leaves intact the core of the triadic interaction, including instructors’ preferred methods, rapport, awareness of external circumstances, and special expertise, all of which are out of scope for course-level AI.

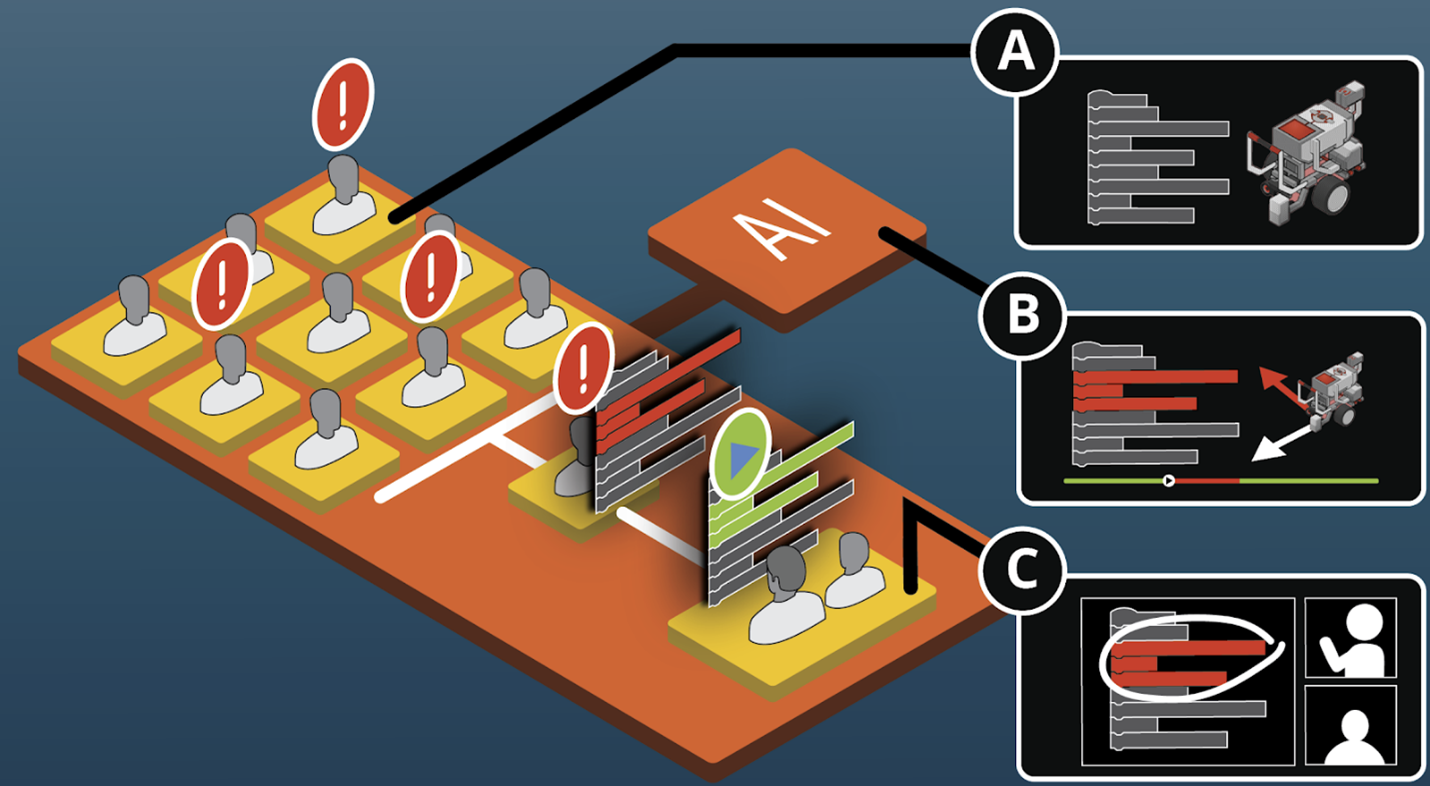

FACILITATE addresses the known and as-yet-unknown technical and sociotechnical integration challenges of an AI-driven convener through design-based research, by developing a proof-of-concept Formative Assessment Suggestion Tool (FAST) in the context of middle school robotics programming. By examining patterns in student code and simulated robot output, a convening AI could identify students who are stuck, infer each student’s intended solution pathway, locate the first point of divergence from a working solution, and then brief the teacher and call a focused help session with the student. These tasks map closely onto the areas teachers identified as most time-consuming in practice.

Conceptual mockup of the FAST system. Student code and simulator output (A) are analyzed by the FAST Convening AI. Key segments are excerpted (B) and used to convene teachers and students to troubleshoot around the focal clip (C).
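The first three convening steps named above (spotting a stuck student, inferring the intended solution pathway, and locating the first divergence from a working solution) could be prototyped roughly as follows. This is a minimal, hypothetical sketch, not the FAST implementation. It assumes each simulator run yields a list of (x, y) robot positions sampled at a fixed rate, that a run "passes" when it reaches the final checkpoint, and that each challenge has a few known working reference trajectories. The names `Run`, `is_stuck`, `infer_pathway`, and `first_divergence`, and the thresholds `window` and `tolerance`, are illustrative choices.

```python
# Hypothetical sketch of three FAST convening steps; not the project's code.
# Assumptions: trajectories are (x, y) positions sampled at a fixed rate,
# a run "passes" if it reached the final checkpoint, and each challenge has
# known working reference trajectories.
from dataclasses import dataclass, field
from math import dist
from typing import Optional, Sequence

Point = tuple[float, float]


@dataclass
class Run:
    timestamp: float                                        # seconds since session start
    passed: bool                                            # reached the final checkpoint?
    trajectory: list[Point] = field(default_factory=list)  # simulated robot positions


def is_stuck(runs: Sequence[Run], window: int = 5) -> bool:
    """Flag a student whose last `window` runs all failed (illustrative rule)."""
    recent = runs[-window:]
    return len(recent) == window and not any(r.passed for r in recent)


def mean_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Average point-wise distance over the overlapping prefix of two paths."""
    n = min(len(a), len(b))
    if n == 0:
        return float("inf")
    return sum(dist(a[i], b[i]) for i in range(n)) / n


def infer_pathway(trajectory: Sequence[Point],
                  references: dict[str, Sequence[Point]]) -> str:
    """Guess the intended solution as the closest reference trajectory."""
    return min(references, key=lambda name: mean_distance(trajectory, references[name]))


def first_divergence(trajectory: Sequence[Point], reference: Sequence[Point],
                     tolerance: float = 0.1) -> Optional[int]:
    """Index of the first sample more than `tolerance` from the reference,
    the shorter length if one path simply ends early, or None if they agree."""
    for i, (p, q) in enumerate(zip(trajectory, reference)):
        if dist(p, q) > tolerance:
            return i
    if len(trajectory) != len(reference):
        return min(len(trajectory), len(reference))
    return None


if __name__ == "__main__":
    references = {
        "straight": [(0, 0), (1, 0), (2, 0)],
        "turn_left": [(0, 0), (1, 0), (1, 1)],
    }
    student = [(0, 0), (1, 0), (1.05, 0.9)]
    intended = infer_pathway(student, references)
    print(intended)                                              # turn_left
    print(first_divergence(student, references[intended], 0.1))  # 2
```

Point-by-point comparison is the simplest possible stand-in. Because students' robots move at different speeds, a real system would likely need some form of time alignment (for example, dynamic time warping) before comparing paths.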

Thus, we hypothesize that a convening AI would accelerate and focus instructional facilitation for open-ended problem solving. We also expect that retaining freedom over instructional methods will increase instructors’ comfort with, acceptance of, and correct use of the technology; in turn, more students should be helped in a shorter time, yielding equal or better learning and motivational outcomes.

This material is based upon work supported by the National Science Foundation under Grant Number 2118883.

Intellectual Merit and Broader Impacts

Intellectual Merit

FACILITATE explores an alternative, convening approach to the design of AI for educational settings. This is a significant departure from existing bodies of work such as Intelligent Tutoring Systems, which effectively replace the teacher for routine interactions, and Analytics Dashboards, which inform teachers but do not connect strongly to well-formed and accepted teaching routines. Design-based exploration of this concept also includes unpacking, and comparison to, the de facto manual methods teachers use to locate such moments today. Research products are expected to include technical papers on the ML implementation, design publications, contextual models of troubleshooting workflows with and without FAST, and exploration of novel concerns and phenomena surfaced through the work.

Broader Impacts

FAST prototypes are expected to directly impact 500 students per year through collaborating teachers. A significant share of these students will be minority and low-SES participants in an urban school system. We further anticipate that this population will be concentrated in remote and hybrid instruction settings, as FAST and its accompanying toolset are entirely virtual and optimized for low-cost computing hardware such as Chromebooks. Troubleshooting individual student programs is particularly difficult in remote settings, so FAST may be a uniquely valuable solution there, encouraging further adoption.

Indirect impact is expected through use in Robotics Academy professional development activities, which reach approximately 500 teachers per year. Untrained facilitators (e.g., in informal education, or teachers working outside their discipline) may also benefit from FAST’s automatic identification of important areas of student work and solution pathways.

The structure of FAST makes it readily portable to other brands of hardware and software, and our lab has done similar work in the past. The convening concept also generalizes to any content domain with an analogous simulation structure. Design documentation and source code are released as open source to support this.

Finally, because convening leaves existing micro-instruction intact, it is fully compatible with ITSs and other pedagogical enhancements, both technological and non-technological. It can thus be adapted to benefit teachers and learners in many other settings, and could accelerate interaction research in those settings by reducing the classroom time spent per interaction.

Year 1 Literature Review

- Molly Q Feldman, Yiting Wang, William E. Byrd, François Guimbretière, and Erik Andersen. 2019. Towards answering “Am I on the right track?” automatically using program synthesis. In Proceedings of the 2019 ACM SIGPLAN Symposium on SPLASH-E (SPLASH-E 2019). Association for Computing Machinery, New York, NY, USA, 13–24. https://doi.org/10.1145/3358711.3361626

- Kevin A. Naudé, Jean H. Greyling, and Dieter Vogts. 2010. Marking student programs using graph similarity. Computers & Education 54, 2 (2010), 545–561. https://doi.org/10.1016/j.compedu.2009.09.005

- Tiantian Wang, Xiaohong Su, Yuying Wang, and Peijun Ma. 2007. Semantic similarity-based grading of student programs. Information and Software Technology 49, 2 (2007), 99–107. https://doi.org/10.1016/j.infsof.2006.03.001

- Chris Parnin and Alessandro Orso. 2011. Are automated debugging techniques actually helping programmers? In Proceedings of the 2011 International Symposium on Software Testing and Analysis (ISSTA '11). Association for Computing Machinery, New York, NY, USA, 199–209. https://doi.org/10.1145/2001420.2001445

- Marcel Böhme, Ezekiel O. Soremekun, Sudipta Chattopadhyay, Emamurho Ugherughe, and Andreas Zeller. 2017. Where is the bug and how is it fixed? An experiment with practitioners. In Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering (ESEC/FSE 2017). Association for Computing Machinery, New York, NY, USA, 117–128. https://doi.org/10.1145/3106237.3106255

- Mark Gabel and Zhendong Su. 2010. A study of the uniqueness of source code. In Proceedings of the eighteenth ACM SIGSOFT international symposium on Foundations of software engineering (FSE '10). Association for Computing Machinery, New York, NY, USA, 147–156. https://doi.org/10.1145/1882291.1882315

- Miltiadis Allamanis, Earl T. Barr, Premkumar Devanbu, and Charles Sutton. 2018. A survey of machine learning for big code and naturalness. ACM Computing Surveys 51, 4, Article 81 (2018).

- Fried, D., Hu, R., Cirik, V., Rohrbach, A., Andreas, J., Morency, L. P., ... & Darrell, T. (2018). Speaker-follower models for vision-and-language navigation. Advances in Neural Information Processing Systems, 31. https://arxiv.org/pdf/1806.02724.pdf

- Hu, H., Yarats, D., Gong, Q., Tian, Y., & Lewis, M. (2019). Hierarchical decision making by generating and following natural language instructions. Advances in Neural Information Processing Systems, 32. https://arxiv.org/pdf/1906.00744.pdf

- Wang, S., Montgomery, C., Orbay, J., Birodkar, V., Faust, A., Gur, I., ... & Anderson, P. (2022). Less is More: Generating Grounded Navigation Instructions from Landmarks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 15428-15438). https://arxiv.org/pdf/2111.12872.pdf

- Lin, B. Y., Zhou, W., Shen, M., Zhou, P., Bhagavatula, C., Choi, Y., & Ren, X. (2019). CommonGen: A constrained text generation challenge for generative commonsense reasoning. arXiv preprint arXiv:1911.03705. https://arxiv.org/pdf/1911.03705.pdf

Year 1 Interface Mockups

To create a tool that successfully convenes teachers and their students at key troubleshooting opportunities, the research team iterated through and tested a variety of user interface designs.

- Teacher Mockup 1 (Simulation Collision Markers)

- Teacher Mockup 2 (Marks Potentially Buggy Line without Explanation)

- Teacher Mockup 3 (Frame-by-Frame Debugger)

- Teacher Mockup 4 (Teacher Dashboard with Suggestions)

- Teacher Mockup 5 (Teacher Dashboard with Correct Solution)

- Teacher Mockup 6 (Classroom Progress Heatmap)

- Student Mockup 1 (Program Execution Tracing with Contextual Suggestions)

- Student Mockup 2 (Program Execution Tracing with Contextual Suggestions)

Lessons learned:

- The timing of interface notifications and the framing of information are equally critical to the goal of convening the teacher and student.

- Teacher-side-only interfaces are very likely to fail: none of the observed teachers stayed at their computers waiting to be told to help a student.

Year 1 Data Collection and Analysis

Classroom Observation Data and Analysis

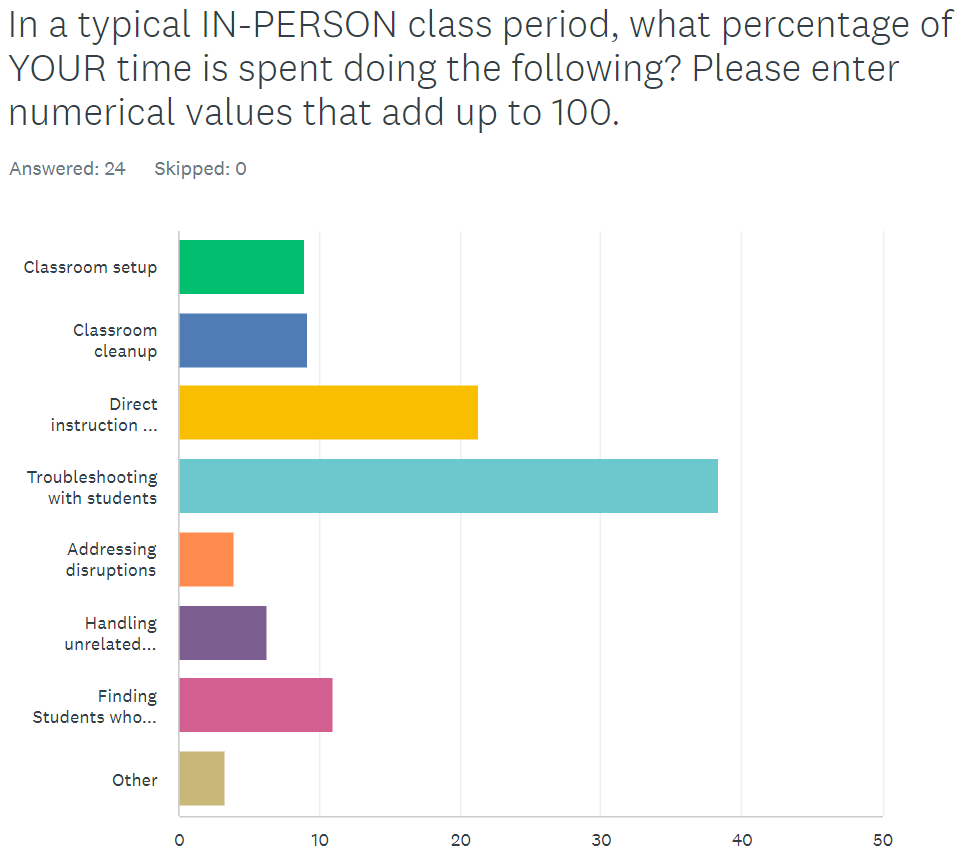

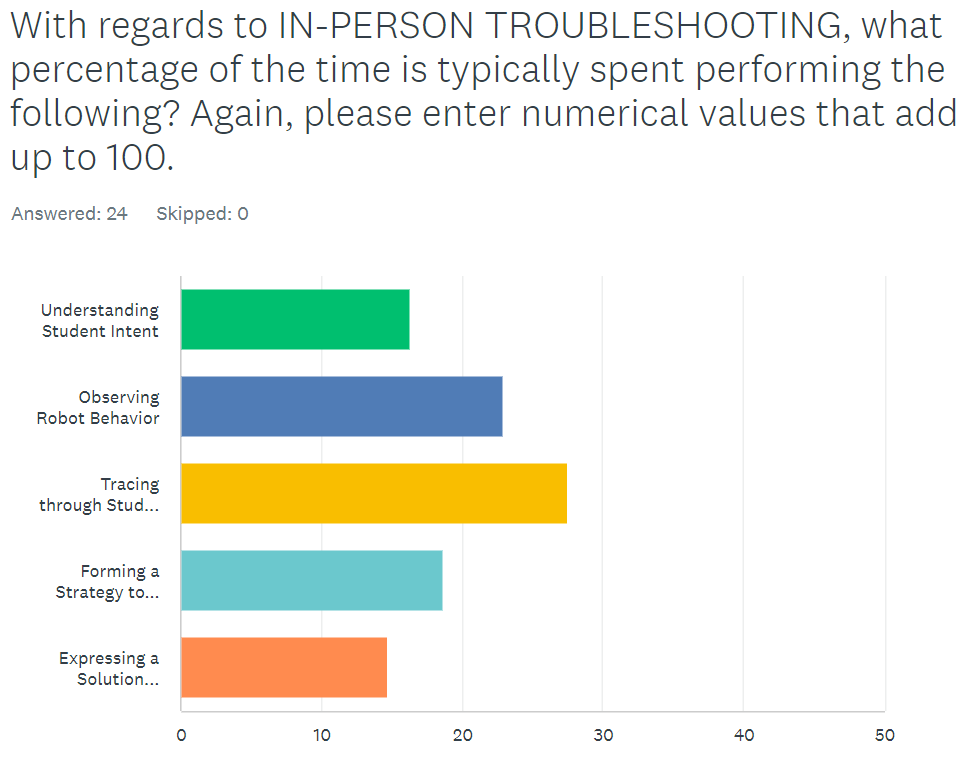

Prior to the start of the project, the research team surveyed teachers to better understand how time was spent in robotics classrooms. Teachers self-reported that almost 40% of class time was spent troubleshooting, most of it tracing through student code.

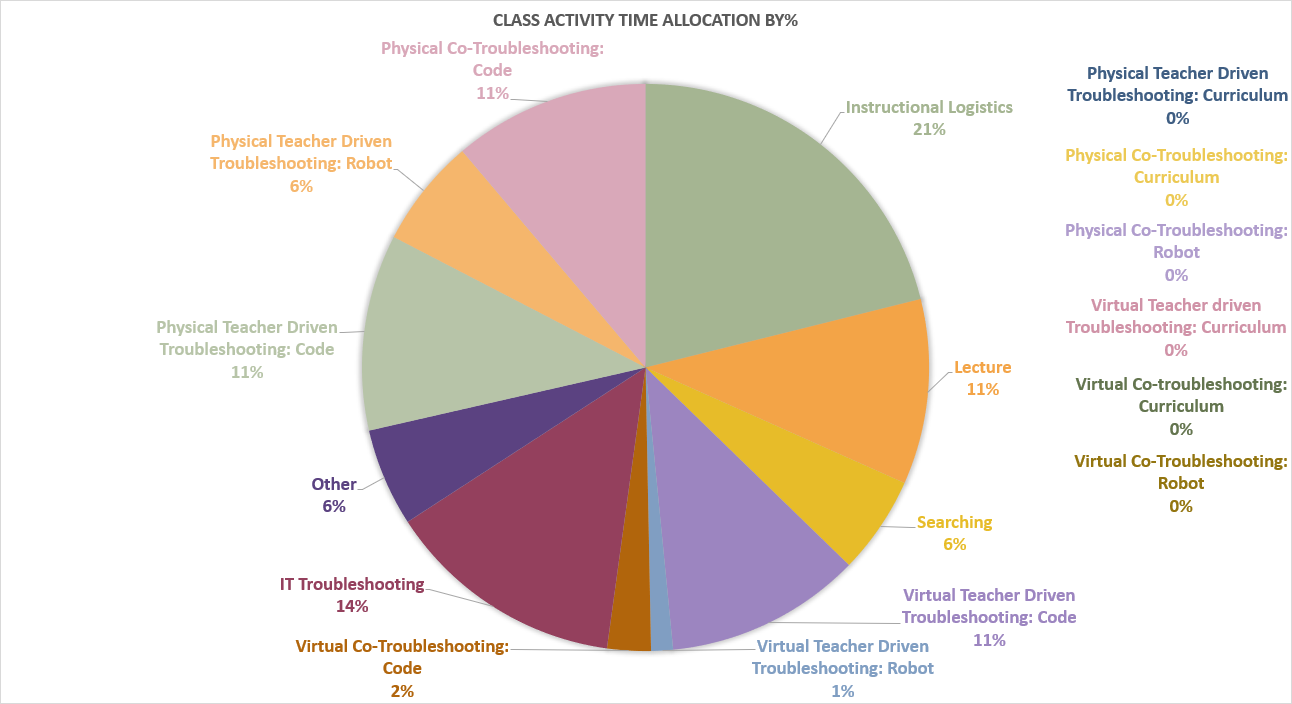

To better understand these behaviors, the research team performed in-person observations of teachers in their typical robotics classes, paying particular attention to, and separately classifying, types of troubleshooting. In the sample shown, 56% of the time is spent in some form of troubleshooting, 32% in some form of direct instruction, and 6% searching for students in need of support.
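The percentages above come from coding observed class time into activity categories. As a hedged illustration of that arithmetic, each observed interval can be tagged with a category, and each category's share is its total duration divided by all observed time. The `(category, start, end)` format, the category labels, and the `time_shares` name are assumptions for this sketch, not the team's coding scheme.

```python
# Sketch of turning coded observation intervals into time-share percentages.
# The (category, start, end) format and the labels are assumptions.
from collections import defaultdict


def time_shares(intervals: list[tuple[str, float, float]]) -> dict[str, float]:
    """Percent of total observed time spent in each category (one decimal)."""
    totals: dict[str, float] = defaultdict(float)
    for category, start, end in intervals:
        totals[category] += end - start  # durations accumulate per category
    observed = sum(totals.values())
    if observed == 0:
        return {}
    return {c: round(100 * t / observed, 1) for c, t in totals.items()}


if __name__ == "__main__":
    # Toy intervals in minutes, invented for illustration (not project data).
    toy = [
        ("troubleshooting", 0, 20),
        ("direct instruction", 20, 35),
        ("troubleshooting", 35, 40),
        ("searching for students", 40, 45),
        ("other", 45, 50),
    ]
    print(time_shares(toy))
    # {'troubleshooting': 50.0, 'direct instruction': 30.0,
    #  'searching for students': 10.0, 'other': 10.0}
```

Summing durations per category, rather than counting intervals, keeps one long troubleshooting episode from weighing the same as a brief check-in.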

Virtual Curriculum Data and Analysis

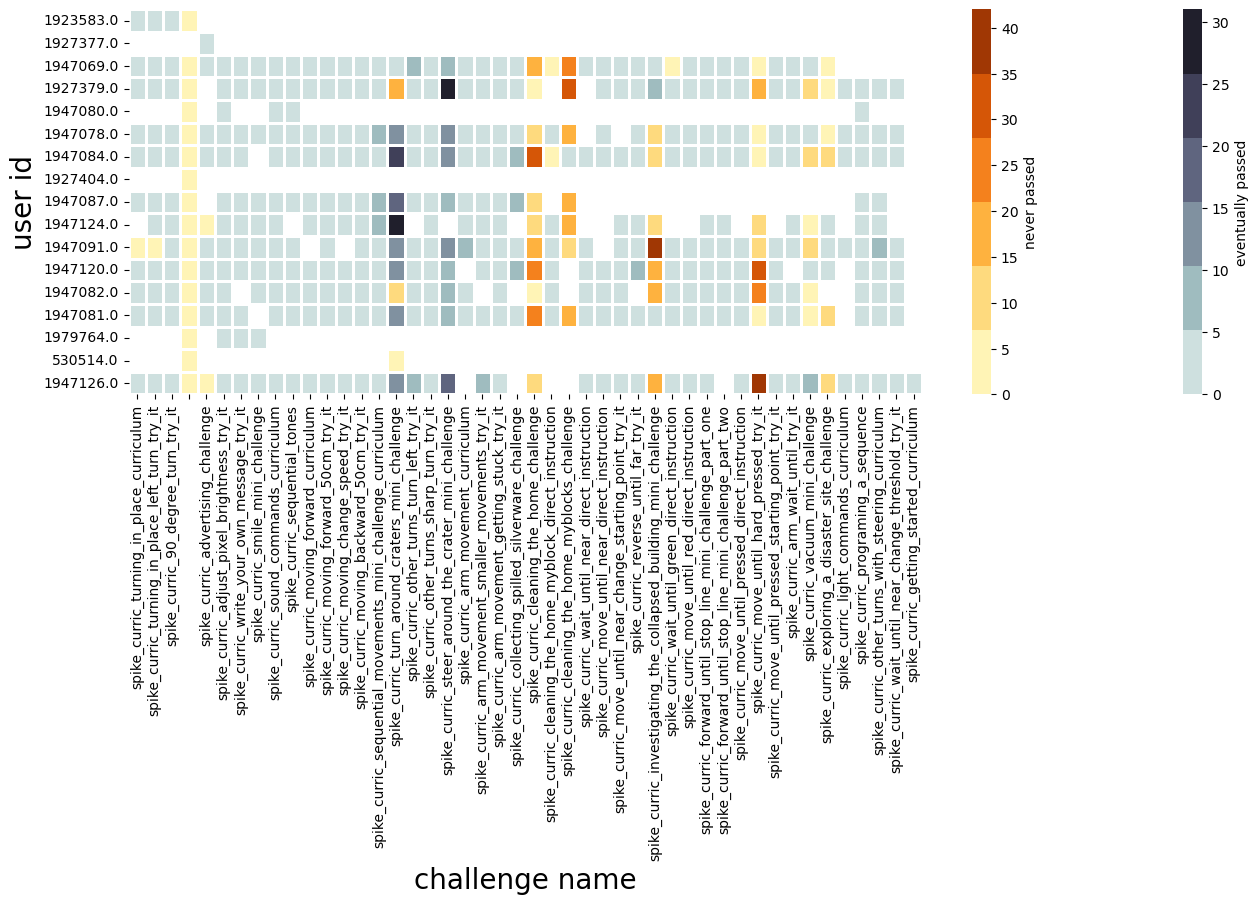

The research team has instrumented the virtual robot simulation environment to log telemetry data and key events (checkpoints, pass, fail), and has instrumented the user programming interface to track code changes. Data from 894 sessions were logged during a summer cohort, allowing the research team to grow a dataset of successful and failed program attempts. This initial dataset is presented below as a heatmap.

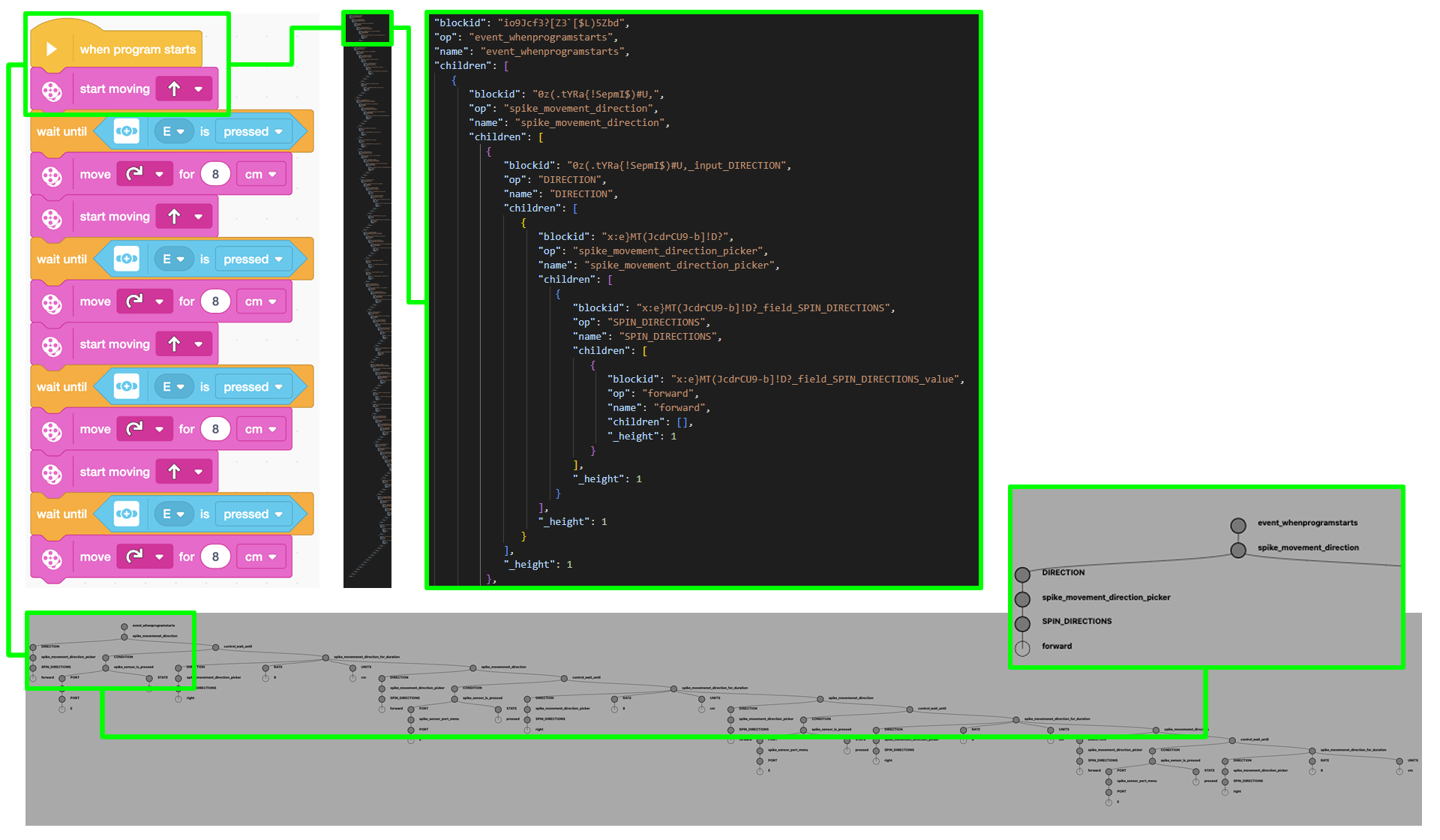
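Logged events of this kind could be reduced to heatmap-ready counts along the lines of the sketch below. It rests on stated assumptions: one JSON object per line with hypothetical `type` and `challenge` fields, rows as challenges, and columns as the three event types. The project's actual log schema and heatmap axes are not specified here, and the function names are illustrative.

```python
# Sketch of aggregating logged simulator events into heatmap counts.
# Assumed (hypothetical) log format: one JSON object per line with a "type"
# field and a "challenge" field; rows = challenges, columns = event types.
import json
from collections import Counter
from typing import Iterable, Iterator

EVENT_TYPES = ("checkpoint", "pass", "fail")


def parse_events(lines: Iterable[str]) -> Iterator[dict]:
    """Yield only simulator outcome events; skip blank lines and other records."""
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        if record.get("type") in EVENT_TYPES:
            yield record


def heatmap_counts(lines: Iterable[str]) -> tuple[list[str], list[list[int]]]:
    """Return (row labels, matrix) where matrix[r][c] counts EVENT_TYPES[c]
    events for challenge r."""
    counts = Counter((ev["challenge"], ev["type"]) for ev in parse_events(lines))
    challenges = sorted({challenge for challenge, _ in counts})
    matrix = [[counts[(ch, t)] for t in EVENT_TYPES] for ch in challenges]
    return challenges, matrix


if __name__ == "__main__":
    log = [
        '{"type": "checkpoint", "challenge": "maze-1"}',
        '{"type": "fail", "challenge": "maze-1"}',
        '{"type": "pass", "challenge": "maze-1"}',
        '{"type": "code_change", "challenge": "maze-1"}',
        '{"type": "fail", "challenge": "line-follow"}',
    ]
    print(heatmap_counts(log))  # (['line-follow', 'maze-1'], [[0, 0, 1], [1, 1, 1]])
```

Code-change records from the programming interface are skipped here; joining them to simulator outcomes by session would be a natural next step.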

Continued Analysis

The student programming environment consists of graphical "word blocks" that reduce syntax errors compared to text-based languages. Each program is represented internally as complex and verbose JSON data, which the word blocks abstract away from students. To understand and quantify the types of logical errors students make, the research team is parsing the JSON data from student solutions into tree visualizations and counting the word-block additions, substitutions, adaptations, and transpositions that separate each solution from known "ideal" solutions.
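One way to produce counts of that kind is an edit distance with a backtrace, sketched below under loudly stated assumptions. The JSON shape (`{"block", "params", "children"}`) is hypothetical, and so is our reading of "adaptation" as keeping a block but changing its parameters. The tree is flattened to a sequence in pre-order, and the swapped-in technique is the optimal string alignment (restricted Damerau-Levenshtein) distance, which recognizes adjacent transpositions. `flatten` and `edit_ops` are illustrative names, not the team's code.

```python
# Sketch: count edit operations between an "ideal" block program and a
# student's program. Assumptions (hypothetical, not the project's schema):
# each block is {"block": name, "params": {...}, "children": [...]}, and an
# "adaptation" means the same block with different parameters.
import json
from typing import Any

Block = tuple[str, str]  # (block name, parameters serialized canonically)


def flatten(node: dict[str, Any]) -> list[Block]:
    """Pre-order walk of the block tree into a flat sequence."""
    out = [(node["block"], json.dumps(node.get("params", {}), sort_keys=True))]
    for child in node.get("children", []):
        out.extend(flatten(child))
    return out


def _swapped(a: list[Block], b: list[Block], i: int, j: int) -> bool:
    """True if a[i-2:i] and b[j-2:j] are the same two blocks in swapped order."""
    return i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]


def edit_ops(ideal: list[Block], student: list[Block]) -> dict[str, int]:
    """Optimal string alignment (restricted Damerau-Levenshtein) distance,
    backtraced to count each kind of edit along one minimum-cost alignment."""
    n, m = len(ideal), len(student)
    d = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        d[i][0] = i
    for j in range(m + 1):
        d[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = int(ideal[i - 1] != student[j - 1])
            d[i][j] = min(d[i - 1][j] + 1,         # block removed by the student
                          d[i][j - 1] + 1,         # block added by the student
                          d[i - 1][j - 1] + cost)  # kept, changed, or replaced
            if _swapped(ideal, student, i, j):
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # adjacent swap

    ops = dict.fromkeys(
        ("addition", "removal", "substitution", "adaptation", "transposition"), 0)
    i, j = n, m
    while i > 0 or j > 0:
        if (_swapped(ideal, student, i, j) and ideal[i - 1] != student[j - 1]
                and d[i][j] == d[i - 2][j - 2] + 1):
            ops["transposition"] += 1
            i, j = i - 2, j - 2
        elif i > 0 and j > 0 and d[i][j] == d[i - 1][j - 1] + int(ideal[i - 1] != student[j - 1]):
            if ideal[i - 1] != student[j - 1]:
                same_name = ideal[i - 1][0] == student[j - 1][0]
                ops["adaptation" if same_name else "substitution"] += 1
            i, j = i - 1, j - 1
        elif j > 0 and d[i][j] == d[i][j - 1] + 1:
            ops["addition"] += 1
            j -= 1
        else:
            ops["removal"] += 1
            i -= 1
    return ops


if __name__ == "__main__":
    ideal = {"block": "program", "children": [
        {"block": "forward", "params": {"cm": 10}},
        {"block": "turn_left", "params": {"deg": 90}},
        {"block": "forward", "params": {"cm": 20}},
    ]}
    student = {"block": "program", "children": [
        {"block": "turn_left", "params": {"deg": 90}},
        {"block": "forward", "params": {"cm": 10}},
        {"block": "forward", "params": {"cm": 30}},
    ]}
    print(edit_ops(flatten(ideal), flatten(student)))
    # {'addition': 0, 'removal': 0, 'substitution': 0, 'adaptation': 1, 'transposition': 1}
```

Flattening discards nesting, so a block moved into or out of a loop shows up as a removal plus an addition. A tree edit distance (for example, Zhang and Shasha's algorithm) would respect the tree structure the team visualizes, at the cost of a more involved implementation.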